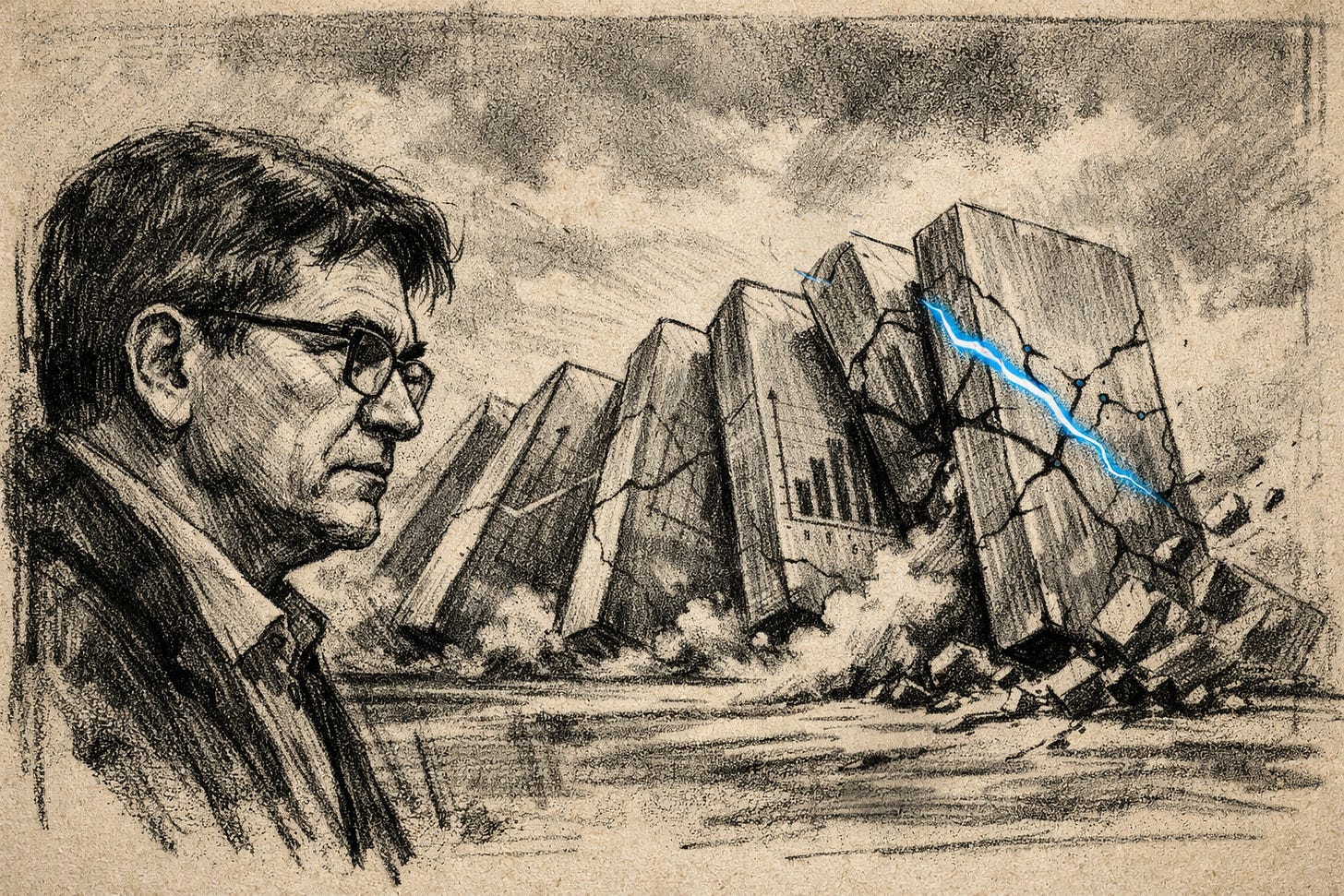

The Benchmark Apocalypse

Fake metrics. Real capital. The scaling narrative has lost its foundation.

I’ve spent the last 30 months testing and building AI solutions and advising enterprises on AI strategy. Every major bet I’ve counseled rested on the same foundation:

benchmark improvements as proof of progress.

Rising AIME scores mean reasoning is improving. LiveCodeBench (LCB) coding gains mean the model is smarter. Better benchmark scores justify bigger infrastructure investments.

Last week, Yann LeCun, Turing Award winner, Meta’s longtime chief AI scientist and a pioneer of convolutional neural networks, admitted that the Llama 4 benchmarks were gamed. Different models for different benchmarks.

Results “fudged a little bit.”

Every recommendation I made based on those benchmarks just became uncertain.

This scandal goes beyond Meta. It exposes a crisis in the measurement infrastructure that guides enterprise AI decisions - and capital allocation across the entire industry.

The Pattern: Three Signs of System Failure

Three things surfaced in the same week. Together they tell a single story.

Sign 1: The Credible Insider Breaks

Yann LeCun made his admission on January 2. Not buried. Not hedged. In a Financial Times interview, LeCun confirmed that Meta had manipulated benchmark results for Llama 4 by “using different models for different benchmarks to give better results.” Meta’s own chief AI scientist said it on the record. This was no anonymous whistleblower. It signals an institutional failure so obvious that denial had become impossible.

Sign 2: The Researcher Exits

Jerry Tworek, Vice President of Research at OpenAI and architect of the company’s o1 and o3 reasoning models, announced his resignation on January 6, 2026. In his resignation statement, Tworek cited a desire to “explore types of research that are hard to do at OpenAI.” One of the people best placed to push the frontier forward walked away.

Sign 3: The Organization Hemorrhages

LeCun’s interview also described the fallout inside Meta. According to LeCun, CEO Mark Zuckerberg “basically lost confidence in everyone who was involved” in the Llama 4 release and “sidelined the entire GenAI organisation.” LeCun added: “A lot of people have left, a lot of people who haven’t yet left will leave.”

The pattern is unmistakable:

The institutions building AI no longer trust their own metrics.

When measurement fails, everything downstream becomes speculation.

Why This Breaks the System

Here’s the uncomfortable part. I understand the incentive structure that created this.

I’ve seen it in enterprises I’ve advised.

When a vendor pitches AI capability, they lead with benchmarks. When a CTO evaluates competing solutions, they compare benchmark scores. When a board approves infrastructure spend, they hear “benchmarks show X improvement justifies Y capex.”

The incentive structure flows upward:

Better benchmarks drive sales, accelerate funding, and secure careers.

A researcher at a major lab faces a choice. Publish honest benchmark results showing marginal improvement, and your career plateaus, funding shrinks and the team gets smaller. Or adjust the methodology slightly, use different models for different benchmarks and present a rosier picture, and you get a promotion, an expanded budget and credibility in the field.

The incentive is asymmetric. Honesty carries visible penalties. Manipulation carries invisible ones. Until someone like LeCun decides to expose it.

This is how good institutions go bad. Not through dramatic villainy. Through a thousand small compromises where the metric becomes more important than the reality.

But here’s the systemic part.

Once one lab does it, competitive pressure forces others to match.

The race to the bottom on measurement integrity accelerates.

The ecosystem lacks enforcement mechanisms, external audits, or an SEC equivalent for AI claims.

When OpenAI publishes GPT-5.2's performance, independent verification remains optional rather than mandatory. When Google claims Gemini 3 beats GPT-5.2 on AIME, there is no regulatory body requiring neutral arbitration. The ecosystem operates on voluntary disclosure where incentives systematically reward performance claims over transparency.

That system has collapsed.

What Happens Next

Three things to watch over the next 90 days.

#1: Verification Emerges or Silence Persists

If OpenAI, Anthropic, and Google jointly announce an independent verification protocol for benchmark claims by March 15, the system is self-correcting. Credibility is being rebuilt.

If silence persists, the industry prefers opacity. The absence of action is confirmation.

#2: Enterprise Procurement Changes

By Q2 2026, ask your vendors: “Can you verify these benchmarks independently?” If they can’t, ask about real-world deployment metrics instead. Production accuracy. Time-to-value. User retention. If they can’t provide those either, you are buying on gamed metrics.

#3: One Lab Revises Prior Claims

The moment any major lab publicly revises or retracts prior benchmark claims, the credibility dam breaks. Once one lab admits “we optimized the measurement,” every lab’s numbers become suspect. That admission is coming. Maybe in March. Maybe later. But it is coming.

The Deeper Stakes

I have built and tested enough AI solutions to know that benchmarks are a poor proxy for real-world performance.

Yet I trusted them because everyone else did. The entire industry aligned around them. Benchmarks became the lingua franca, the measurement that felt objective.

LeCun’s admission redefines that language as subjective.

What is shocking is that it took until January 2026 for someone with that much credibility to say it publicly.

The measurement crisis leaves AI progress intact but undermines the justification for capital allocation. The theories that drove infrastructure spending, research priorities and competitive strategy have cracked.

Enterprise teams that built their AI strategy on benchmark leadership now feel the ground shifting beneath them. The shift is perceptible, if not catastrophic.

The 30-Day Moment

Here is what I am watching for in the next 30 days.

OpenAI publishes their next model performance claim. Google announces Gemini improvements. Anthropic releases capability updates. Every announcement will now carry implicit uncertainty. Enterprise buyers will ask: Are these numbers real? Or optimized?

That question changes everything.

Because once it is asked, it cannot be unasked. Once credibility fails, measurement fails. Once measurement fails, enterprise decision-making must be rebuilt on different foundations.

The LeCun admission did not create the fracture. It revealed one that was already there.

Now every enterprise must choose:

Rebuild your AI evaluation criteria around verifiable deployment metrics.

Or keep buying on vendor benchmark claims you now know are suspect.

Silence is a choice. A third option does not exist.

This article is the diagnostic framework.

Paid subscribers receive the full analysis: the Challenge Inventory (What systems are fracturing), the Crux (Which failure gates all others), and the Guiding Policy (Where-to-Play/How-to-Win for your enterprise).

Sources

Financial Times - Computer scientist Yann LeCun: ‘Intelligence really is about learning’

Business Times (India) - OpenAI reasoning chief Jerry Tworek quits after 7 years: Here’s why