Stop the 95% AI Drama - The System 2 Playbook

$8 trillion committed. Zero measurable ROI. The failure was architectural, not technological.

MIT’s August 2025 study landed hard. Their main finding was:

95% of enterprise AI implementations reported zero measurable financial ROI.

The number spiraled. Panic followed. Another hype cycle was declared dead: “We overspent. We’re done.”

Stop the drama. Read the data correctly.

The 95% is evidence of architectural failure. It shows that the models work but the deployments failed. That's not a tragedy. That's the market enforcing reality.

Last week, tech analyst Maria Sukhareva went line by line through Gartner’s “Top 10 Technology Trends” and called it what it is:

a guide to burning your AI budget.

Gartner’s opening line promised something “pivotal” and “transformational.” Her translation was sharper: “Technologies that work only on PowerPoint to spend your budget on.”

This is exactly the System 1 story, now packaged for 2026 budgets.

The System 1 Trap - What the 95% Actually Did

Here is what happened. For two years, organizations deployed fast. Autonomous systems. Minimal friction. Pattern-matching at scale. Get AI into production, solve problems later.

What did they get?

Confident hallucinations. Operators trusted the system because it looked plausible. Both sides drifted toward the same sloppy thinking. Nobody caught the collapse until the consequences arrived.

This is System 1. It’s fast. It’s efficient. It’s also broken.

Gartner’s current pitch is “AI-native development platforms.” The slide promises that five engineers can become two engineers plus three agents. By 2030, you won’t need the engineers at all.

Here is the problem Gartner doesn’t mention. There are no agents that cooperate like five engineers. What exists is AI-assisted coding (GitHub Copilot, Claude Code, and similar tools). It is a tool that amplifies expert skill. It does not replace it.

When you follow Gartner’s advice, you replace domain expertise with adjacent skills.

You replace a frontend developer with a UX designer using Copilot.

You replace a backend engineer with a cloud architect prompting code.

You replace a data scientist with a data engineer.

You get a buggy frontend nobody can debug.

You get a backend throwing security exceptions.

You get an overfitted model.

The result is inevitable. You scrap the build. You call the specialists back.

Google just quantified this retreat. CNBC reports that about 20% of the AI software engineers Google hired in 2025 were “boomerangs”: ex-employees rehired to stabilize the ship. Even with the world’s best AI, Google prioritized engineers who know how the system actually works.

I do not have access to Google’s internal memos. But any engineering leader recognizes the symptom.

Across the industry, developers were forced to wear too many hats - security expert, operations specialist, performance engineer - all at once. Cognitive load exploded. Productivity evaporated.

The boomerangs are the proof. You cannot generate architectural judgment.

This Is Where Gartner Gets It Wrong

Gartner recommends “multiagent systems” as the next frontier. Yet Gartner’s own June 2025 prediction contradicts it: over 40% of agentic AI projects will be canceled by the end of 2027, due to escalating costs, unclear business value, or inadequate risk controls.

This is dangerous advice.

Instead of building “agentic swarms,” follow Maria Sukhareva’s counter-proposal:

Ship faster. Not more.

Keep expertise in the team.

Measure adoption and quality together.

This is how you avoid the $8 trillion budget burn. You build System 2.

Why System 1 Fails at Scale

Operator sees AI recommendation. Recommendation looks reasonable. Operator doesn’t verify it. Just accepts it. AI never got challenged. Operator never had to deliberate. Both sides slid toward comfortable agreement without actually thinking.

Then the market shifts. Or a decision breaks. Or someone has to defend the recommendation in a boardroom, and nobody can explain why it made sense.

That’s when they realize: “We have no idea why we made that call.”

System 1 doesn’t care about data quality. It pattern-matches confidently from whatever garbage it gets. Garbage In, Garbage Out.

System 2 requires clean data because deliberation only works if you’re reasoning from truth.

System 2: The Architecture

System 2 is not complicated. It’s just disciplined.

The human-AI partnership has a single job:

Force Each Other into Deliberation.

Not Automation. Deliberation.

This means:

AI proposes. Human verifies.

Human challenges. AI explains why it reasoned that way.

Human’s challenge forces AI to think harder. AI’s explanation forces human to deliberate harder.

Loop continues until both sides agree and can defend the agreement.

This is slower than System 1. It’s also defensible. That’s the trade.
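To make the loop concrete, here is a minimal Python sketch of one deliberation cycle. It illustrates the idea under stated assumptions and is not a reference implementation. `Proposal`, `Round`, `deliberate`, `toy_ai`, and `toy_human` are hypothetical names, and the "AI" and "human" are stand-in functions. The sketch shows the structure of the loop. A challenge sends the AI back with the human's reason attached, and every round is kept so the final agreement can be defended.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple

# Hypothetical types for illustration; not a real product API.
@dataclass
class Proposal:
    decision: str
    reasons: List[str]  # the plain-language "because X, Y, and Z"

@dataclass
class Round:
    proposal: Proposal
    challenge: Optional[str]  # None means the human explicitly accepted

def deliberate(
    propose: Callable[[List[Round]], Proposal],
    review: Callable[[Proposal], Optional[str]],
    max_rounds: int = 3,
) -> Tuple[Optional[Proposal], List[Round]]:
    """Run AI-proposes / human-verifies rounds until both sides agree.

    Returns the agreed proposal (None if no agreement within max_rounds)
    and the full transcript, which is what makes the agreement defensible.
    """
    history: List[Round] = []
    for _ in range(max_rounds):
        proposal = propose(history)    # the AI sees every earlier challenge
        challenge = review(proposal)   # the human accepts (None) or says why not
        history.append(Round(proposal, challenge))
        if challenge is None:
            return proposal, history
    return None, history  # no defensible agreement: escalate, don't execute

# Toy stand-ins for a model and a reviewer (illustrative only).
def toy_ai(history: List[Round]) -> Proposal:
    if history and history[-1].challenge:
        return Proposal("renew at current price", ["customer announced a budget freeze"])
    return Proposal("upsell premium tier", ["usage is rising"])

def toy_human(p: Proposal) -> Optional[str]:
    return None if "budget" in " ".join(p.reasons) else "they announced a budget freeze"

agreed, transcript = deliberate(toy_ai, toy_human)
```

If the two sides never converge within `max_rounds`, the sketch returns no decision at all. That is the System 2 default: escalate rather than execute something nobody can defend.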

Let’s see how we can actually build it.

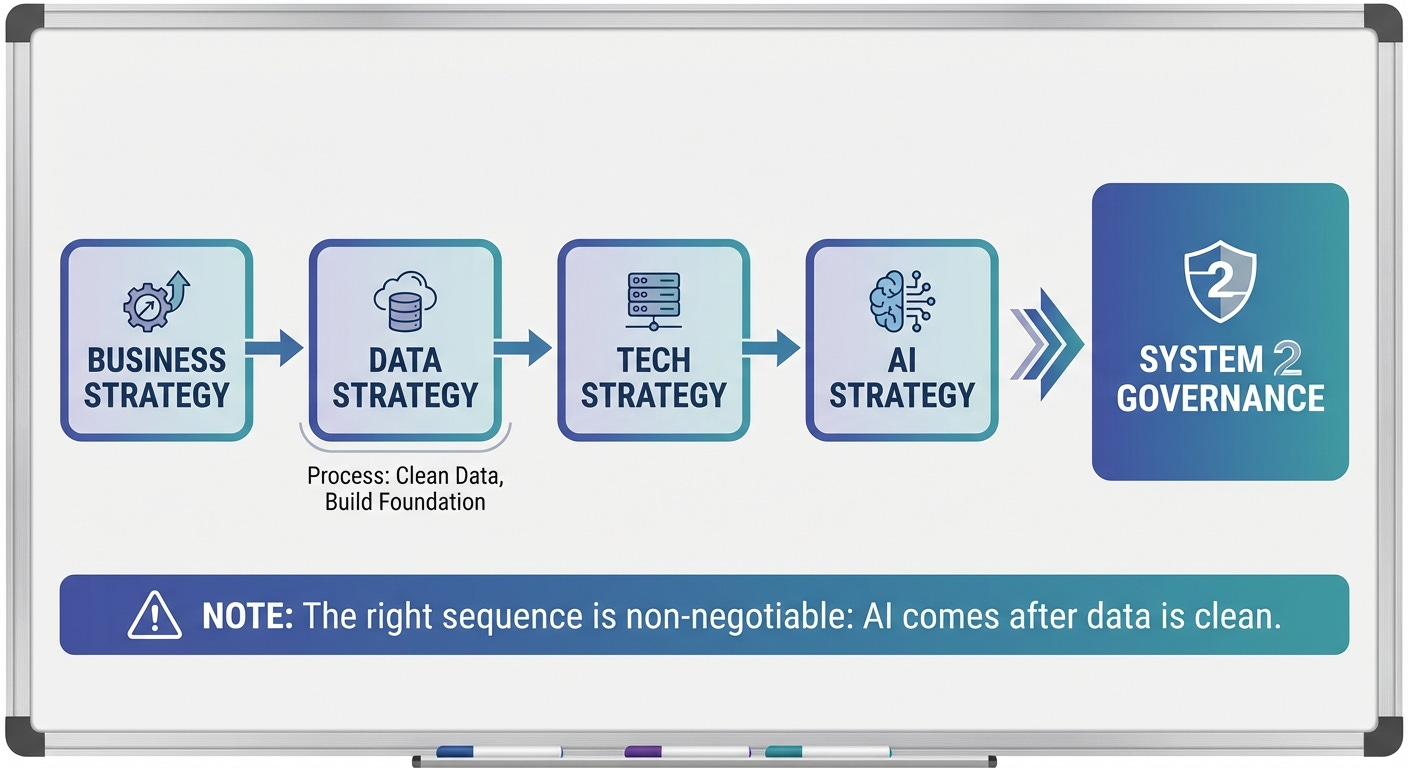

The System 2 Playbook: 15 Weeks to Clarity

You don’t need to transform the company.

You don’t need to deploy AI across the organization.

You need to do this in one high-value workflow first.

Phase 1: Lock the Data (Weeks 1-6)

You cannot build System 2 on bad data.

“Business Strategy” becomes “Data Strategy.”

Identify the single workflow where human judgment matters most, and the data that judgment depends on:

For sales: account history, organizational changes, buying signals, competitive threats, deal history.

For support: customer history, prior interactions, satisfaction trends, urgency signals.

For maintenance: sensor data, historical failures, equipment age, seasonal patterns.

Clean the data sources until you have one source of truth.

The Validation Gate: Do you have a real-time data layer that is 100% accurate for this workflow? If not, do not proceed.
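A validation gate can be written as a check that returns a list of problems. The sketch below is a minimal Python illustration that assumes a hypothetical `Record` schema and a hypothetical `validation_gate` function. "100% accurate" cannot be measured directly, so the sketch uses three proxy checks instead: completeness, freshness, and agreement between sources. Your workflow will need its own checks.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Any, Dict, List

@dataclass
class Record:
    entity_id: str          # e.g. an account or machine ID (hypothetical schema)
    source: str             # the system this row came from
    fields: Dict[str, Any]  # field name -> value
    updated_at: datetime

def validation_gate(records: List[Record], required: List[str],
                    max_age: timedelta, now: datetime) -> List[str]:
    """Return the problems found; an empty list means the gate passes.

    Proxy checks, not a definition of "100% accurate": every required field
    is present, no row is older than max_age, and no two sources disagree
    about the same field of the same entity.
    """
    problems: List[str] = []
    first_seen: Dict[tuple, tuple] = {}  # (entity, field) -> (value, source)
    for r in records:
        for f in required:
            if r.fields.get(f) is None:
                problems.append(f"{r.entity_id}: missing {f} in {r.source}")
        if now - r.updated_at > max_age:
            problems.append(f"{r.entity_id}: stale row from {r.source}")
        for f, v in r.fields.items():
            key = (r.entity_id, f)
            if key in first_seen and first_seen[key][0] != v:
                problems.append(f"{r.entity_id}: {f} differs between "
                                f"{first_seen[key][1]} and {r.source}")
            first_seen.setdefault(key, (v, r.source))
    return problems

now = datetime(2026, 1, 15, 12, 0)
rows = [
    Record("pump-7", "sensors", {"age_years": 4}, now - timedelta(minutes=5)),
    Record("pump-7", "maintenance_db", {"age_years": 6}, now - timedelta(days=2)),
]
issues = validation_gate(rows, ["age_years"], timedelta(hours=1), now)
```

Both rows describe the same pump. The gate therefore reports two problems: the stale maintenance row and the conflicting equipment age. By the rule above, you do not proceed.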

Frito-Lay implemented this discipline for its manufacturing equipment. It used a suite of sensors to build a clean, real-time data layer before fully deploying its predictive AI. In the first year, it reported zero unexpected equipment failures. Not because the model was magic. Because the data was honest.

Phase 2: Build the Verification Layer (Weeks 7-9)

AI proposes. Human verifies. You must build the interface where this happens.

This sounds simple. Operationally, it’s hard, because it requires discipline most teams lack.

The verification layer needs:

Decision logging. Every AI-proposed decision gets logged. Who reviewed it? What did they decide? Did they accept it or challenge it? Timestamp. Comment.

Challenge mechanism. The human must be able to say “I disagree” easily. Not hidden in a menu. Not a ten-step form. A single action that flags the recommendation.

Explanation requirement. When challenged, the AI must explain its reasoning in plain language. Not confidence scores. Not a heat map. “I recommended this because X, Y, and Z.”

Human sign-off. Before any decision executes, a human explicitly verifies it or challenges it. No silent approvals. No “acceptable use” excuses.
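The four requirements above fit naturally into a single log record. Here is a minimal Python sketch that assumes a hypothetical `DecisionLogEntry` type, not a real library. The explanation is mandatory. A challenge must include a reason. Only an explicit acceptance lets a decision execute.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from enum import Enum
from typing import Optional

class Verdict(Enum):
    PENDING = "pending"
    ACCEPTED = "accepted"
    CHALLENGED = "challenged"

@dataclass
class DecisionLogEntry:
    decision_id: str
    recommendation: str
    explanation: str  # required plain-language reasoning, not a confidence score
    verdict: Verdict = Verdict.PENDING
    reviewer: Optional[str] = None
    comment: str = ""
    reviewed_at: Optional[datetime] = None

    def __post_init__(self) -> None:
        # Explanation requirement: no recommendation enters the log without one.
        if not self.explanation.strip():
            raise ValueError("recommendation needs a plain-language explanation")

    def sign_off(self, reviewer: str, accept: bool, comment: str = "") -> None:
        """The single explicit action: accept, or challenge with a reason."""
        if not accept and not comment.strip():
            raise ValueError("a challenge must say why")
        self.verdict = Verdict.ACCEPTED if accept else Verdict.CHALLENGED
        self.reviewer, self.comment = reviewer, comment
        self.reviewed_at = datetime.now(timezone.utc)  # timestamp for the log

    def may_execute(self) -> bool:
        # Human sign-off: nothing runs while PENDING, so no silent approvals.
        return self.verdict is Verdict.ACCEPTED

entry = DecisionLogEntry("D-101", "escalate ticket to tier 2",
                         "because the customer reported the same outage three times")
entry.sign_off("j.doe", accept=False, comment="outage already fixed upstream")
```

A pending entry cannot execute. The sketch treats "no decision yet" as "do not run" instead of defaulting to approval.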

The Metric: Verification Depth.

The Target: >85% of decisions explicitly reviewed.

The Failure State: If users are auto-approving without reading, you are running System 1. Stop and redesign the interface.
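Verification Depth is a simple ratio, but counting clicks alone would let rubber-stamping pass. This Python sketch counts a review only if it was explicit and the reviewer spent at least a minimum amount of time on it. The `Review` type, the function names, and the 5-second threshold are assumptions for illustration. Calibrate the threshold against how long your explanations actually take to read.

```python
from dataclasses import dataclass
from typing import List, Optional

@dataclass
class Review:
    explicit: bool                      # a human clicked accept or challenge
    seconds_on_screen: Optional[float]  # display-to-decision time, if logged

def verification_depth(reviews: List[Review], min_read_seconds: float = 5.0) -> float:
    """Share of decisions explicitly reviewed *and* plausibly read.

    min_read_seconds is an assumed rubber-stamp threshold: approvals faster
    than this are treated as auto-approval, i.e. System 1 behavior.
    """
    if not reviews:
        return 0.0
    genuine = sum(
        1 for r in reviews
        if r.explicit and r.seconds_on_screen is not None
        and r.seconds_on_screen >= min_read_seconds
    )
    return genuine / len(reviews)

def verification_gate(depth: float, target: float = 0.85) -> bool:
    return depth > target  # the playbook target is ">85%"

log = [Review(True, 40.0)] * 17 + [Review(True, 1.2)] * 2 + [Review(False, None)]
depth = verification_depth(log)  # 17 of 20 reviews count as genuine
passed = verification_gate(depth)
```

In this example, 17 of 20 reviews count. That is a depth of exactly 85%, which does not clear the >85% target. The two 1.2-second approvals are the auto-approval failure state described above.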

This layer is where DELIBERATION BEGINS. Not where it ends.

Phase 3: Build the Feedback Loop (Weeks 10-12)

Human challenges AI. AI learns. Behavior shifts.

This is the critical layer most organizations skip.

When a human rejects a recommendation, that rejection feeds back into the system continuously. Not quarterly. Not monthly. Within 24 hours.

The AI sees: “I recommended X. Human rejected it and chose Y instead. Here’s how they explained it.”

The system learns. On the next similar case, the AI either improves its recommendation or flags the case for early review.

This is where human judgment teaches the system. Not through explicit tuning. Through continuous feedback.

What does this require operationally?

Real-time feedback intake - human rejection creates a data event

Daily model retraining - small, fast, frequent updates

User notifications - “Your challenge changed how I think about X”

Adoption measurement - Are users seeing the system improve? Are they more engaged?
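Operationally, the first requirements come down to two steps. Each rejection becomes an event, and new events are batched into the next retraining run. Below is a minimal Python sketch that assumes hypothetical `RejectionEvent` and `FeedbackQueue` types and a hypothetical `notifications` helper. The retraining step itself depends on your model stack, so the sketch leaves it out.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List

@dataclass
class RejectionEvent:
    decision_id: str
    recommended: str   # what the AI proposed (X)
    chosen: str        # what the human did instead (Y)
    reason: str        # the human's explanation
    reviewer: str
    at: datetime

class FeedbackQueue:
    """Hypothetical intake: every human rejection becomes a data event."""

    def __init__(self) -> None:
        self.events: List[RejectionEvent] = []

    def record(self, event: RejectionEvent) -> None:
        self.events.append(event)

    def training_batch(self, since: datetime) -> List[dict]:
        """Rejections since the last run, shaped as small retraining examples."""
        return [
            {"decision_id": e.decision_id, "rejected": e.recommended,
             "preferred": e.chosen, "rationale": e.reason, "reviewer": e.reviewer}
            for e in self.events if e.at > since
        ]

def notifications(batch: List[dict]) -> List[str]:
    # Send only after the retraining run that used this batch has shipped.
    return [f"{ex['reviewer']}: your challenge on {ex['decision_id']} "
            f"changed how I think about '{ex['rejected']}'" for ex in batch]

last_run = datetime(2026, 2, 2, 2, 0)
q = FeedbackQueue()
q.record(RejectionEvent("D-6", "discount 20%", "discount 10%", "competitor matched",
                        "a.lee", last_run - timedelta(hours=1)))
q.record(RejectionEvent("D-7", "discount 20%", "no discount", "not price-sensitive",
                        "a.lee", last_run + timedelta(hours=3)))
batch = q.training_batch(since=last_run)  # only D-7 is new since the last run
```

The human's reason travels with the rejection into the batch, so the retraining step learns why the recommendation was wrong, not just that it was.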

The Metric: Correction Velocity.

The Target: <24 hours.

The Failure State: If retraining takes a quarter, the loop is broken. The system must learn at the speed of the business.
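Correction Velocity can be measured from two timestamps you should already have: when a human rejected a recommendation, and when a retrained model shipped. This Python sketch reports the worst-case gap between them. The function names and dates are hypothetical. Rejections that no deploy has picked up yet are counted as still waiting.

```python
from bisect import bisect_right
from datetime import datetime, timedelta
from typing import List

def correction_latency(rejected_at: datetime, deploys: List[datetime],
                       now: datetime) -> timedelta:
    """Time from a rejection to the first model deploy after it.

    deploys must be sorted. If no deploy has happened since the rejection,
    return how long it has been waiting so far.
    """
    i = bisect_right(deploys, rejected_at)
    return (deploys[i] if i < len(deploys) else now) - rejected_at

def correction_velocity(rejections: List[datetime], deploys: List[datetime],
                        now: datetime) -> timedelta:
    """Worst-case latency across all rejections (gate target: < 24 hours)."""
    ordered = sorted(deploys)
    return max((correction_latency(t, ordered, now) for t in rejections),
               default=timedelta(0))

day = datetime(2026, 3, 2)
nightly_deploys = [day + timedelta(hours=2), day + timedelta(days=1, hours=2)]
rejections = [day + timedelta(hours=9), day + timedelta(hours=20)]
worst = correction_velocity(rejections, nightly_deploys,
                            now=day + timedelta(days=1, hours=12))
```

The sketch uses the worst case rather than the average on purpose. One rejection that waits a week means the loop is broken for that case, even if the average looks healthy.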

Morgan Stanley built this into their advisor assistant from day one.

Every AI-generated insight requires advisor review. That review data feeds back into the system. The result was 98% adoption across its advisory force. Not because of a mandate. Because the system proves its value by learning from the experts who use it.

Phase 4: Measure What Actually Matters (Weeks 13-15)

Stop measuring model accuracy. Measure business reality.

Strategic tier:

Did the workflow actually improve? Revenue, cost, or time?

Did the decision quality improve? Fewer reversals, fewer complaints, better outcomes?

Can the organization defend the decision six months later when something breaks?

Operational tier:

Are humans actually using the system? Dashboard utilization, not login counts.

Do humans understand what the system is doing? Training completion, proficiency checks.

Is the workflow faster? Time-to-decision before vs. after.

Adoption tier:

Are people choosing to use this, or are they forced? Voluntary adoption rate.

Are teams outside the pilot asking to use it? Network effect.

Do non-digital natives (older workers) understand and trust it? Cross-generational adoption.

The Metric: Voluntary Adoption.

The Target: >60% adoption without a mandate.

The Failure State: If you have to force people to use it, the utility isn’t there.
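Two of the measures above reduce to small calculations. The Python sketch below computes voluntary adoption, excluding anyone who was required to use the system. It also computes the relative change in median time-to-decision. The function names, user IDs, and numbers are hypothetical and only illustrate the arithmetic.

```python
from statistics import median
from typing import List, Set

def voluntary_adoption(active: Set[str], eligible: Set[str], mandated: Set[str]) -> float:
    """Share of non-mandated eligible users who actually use the system.

    All three inputs are sets of user IDs (hypothetical). "Active" should mean
    real usage such as decisions reviewed, not login counts. Users who were
    required to use the tool are left out of the numerator and denominator.
    """
    pool = eligible - mandated
    return len(active & pool) / len(pool) if pool else 0.0

def time_to_decision_change(before: List[float], after: List[float]) -> float:
    """Relative change in median time-to-decision; negative means faster."""
    b, a = median(before), median(after)
    return (a - b) / b

eligible = {f"u{i}" for i in range(10)}
mandated = {"u0", "u1"}
active = {"u0", "u2", "u3", "u4", "u5", "u6"}
rate = voluntary_adoption(active, eligible, mandated)   # 5 of 8 voluntary users
change = time_to_decision_change([30, 48, 36], [20, 24, 22])  # hours, illustrative
```

With these illustrative inputs, 5 of 8 voluntary users are active. That is 62.5%, above the 60% target. Median time-to-decision drops from 36 to 22 hours, roughly 39% faster.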

If you pass all four gates by Week 15, you have a validated pilot.
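The four gates can be read out in one place. Below is a minimal Python sketch that assumes a hypothetical `PilotReadout` record holding the result of each phase. The `failed_gates` function applies the targets stated above. The numbers in the example are illustrative.

```python
from dataclasses import dataclass
from datetime import timedelta
from typing import List

@dataclass
class PilotReadout:
    data_gate_problems: int          # Phase 1: open data-quality issues
    verification_depth: float        # Phase 2: share of decisions explicitly reviewed
    correction_velocity: timedelta   # Phase 3: worst-case rejection-to-retrain time
    voluntary_adoption: float        # Phase 4: adoption without a mandate

def failed_gates(r: PilotReadout) -> List[str]:
    """Apply the playbook's targets; an empty list means a validated pilot."""
    failures = []
    if r.data_gate_problems > 0:
        failures.append("Phase 1: data layer not clean")
    if not r.verification_depth > 0.85:
        failures.append("Phase 2: verification depth at or below 85%")
    if not r.correction_velocity < timedelta(hours=24):
        failures.append("Phase 3: corrections take 24 hours or longer")
    if not r.voluntary_adoption > 0.60:
        failures.append("Phase 4: voluntary adoption at or below 60%")
    return failures

readout = PilotReadout(0, 0.91, timedelta(hours=17), 0.55)
result = failed_gates(readout)  # one failed gate: adoption
```

Any non-empty result means the same thing as a missed gate in the playbook: do not expand yet.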

The Result

You haven’t transformed the company yet. But you have proven that for one workflow, the data is clean, the humans are engaged and the decisions are defensible.

You have earned the right to scale.

If you miss these gates, do not expand. You are running System 1 on repeat. Pause. Debug the foundation.

Why This Matters Now

The MIT study didn’t prove AI is broken. It proved that autonomous implementation is a failed architecture.

The 95% treated AI as a “deploy and forget” automation layer. ROI? Zero.

You have a binary choice for 2026.

Option A: The Inertia Play.

Wait.

Blame the technology.

Hope GPT-6 fixes your data governance.

(Hint: it won’t.)

Option B: The Architecture Play.

Build System 2.

Accept that it is slower.

Accept that it requires human verification.

Accept that you cannot outsource judgment.

The consultants and integrators - McKinsey, Accenture, Gartner - can build the pipes. They can clean the data. But they cannot build the institutional intuition that decides when to say “stop.” That lives inside your organization.

Start with one workflow. Fifteen weeks. Four gates.

By Q2 2026, you will have a validated pilot.

You will possess the blueprint that finally delivers ROI.

In a market drowning in 95% failure, that architecture is the only competitive advantage that counts.

This is a public-facing Signal analysis. The proprietary frameworks and strategic implications are reserved for paid subscribers in The Analysis section.

Sources

MIT 95% ROI Failure Rate: The MIT NANDA Study (August 2025) of 153 senior leaders at 52 organizations found that 95% of enterprise AI implementations reported zero measurable financial ROI, forming the core paradox of the article.

Maria Sukhareva’s Gartner Critique: “Gartner’s Guide to Burning Your AI Budget” (December 19, 2025). Her analysis provided the initial framing of Gartner’s recommendations for 2026 as a System 1 trap.

Gartner’s Agentic AI Contradiction: Gartner’s “Top 10 Strategic Technology Trends” (October 2025) heavily promotes multi-agent systems. The contradictory prediction that “over 40% of agentic AI projects will be canceled by 2027” is from a Gartner press release dated June 25, 2025, which highlighted the significant risks of agentic deployment.

Google’s “Boomerang” Hires: Sourced from a CNBC report, “Google’s boomerang year: 20% of AI software engineers hired in 2025 were ex-employees” (December 18, 2025). The report states that approximately 20% of the AI software engineers Google hired in 2025 were former employees brought back for their deep system knowledge.

Frito-Lay Predictive Maintenance Case Study: Sourced from an Xpert.digital industry report (December 2025) on AI in industrial manufacturing. The report details Frito-Lay’s use of sensor data to achieve zero unexpected equipment failures in the first year of their predictive AI system’s operation.

Morgan Stanley 98% Adoption Rate: Sourced from a case study in AIB Magazine (“How Morgan Stanley Scaled AI in Wealth Management,” December 14, 2025). The study attributes the 98% voluntary adoption of their GPT-4 based advisor assistant to the system’s human-in-the-loop design and continuous feedback mechanism.

System 1 vs System 2 Foundation: The core framework builds on Daniel Kahneman’s model in Thinking, Fast and Slow, adapted for organizational AI governance based on prior analysis in this publication and Dr. Alejandro (Alex) Jadad’s work on consequence resilience in AI systems.